Beyond 10% vs 15%: a testing framework for smarter promotions

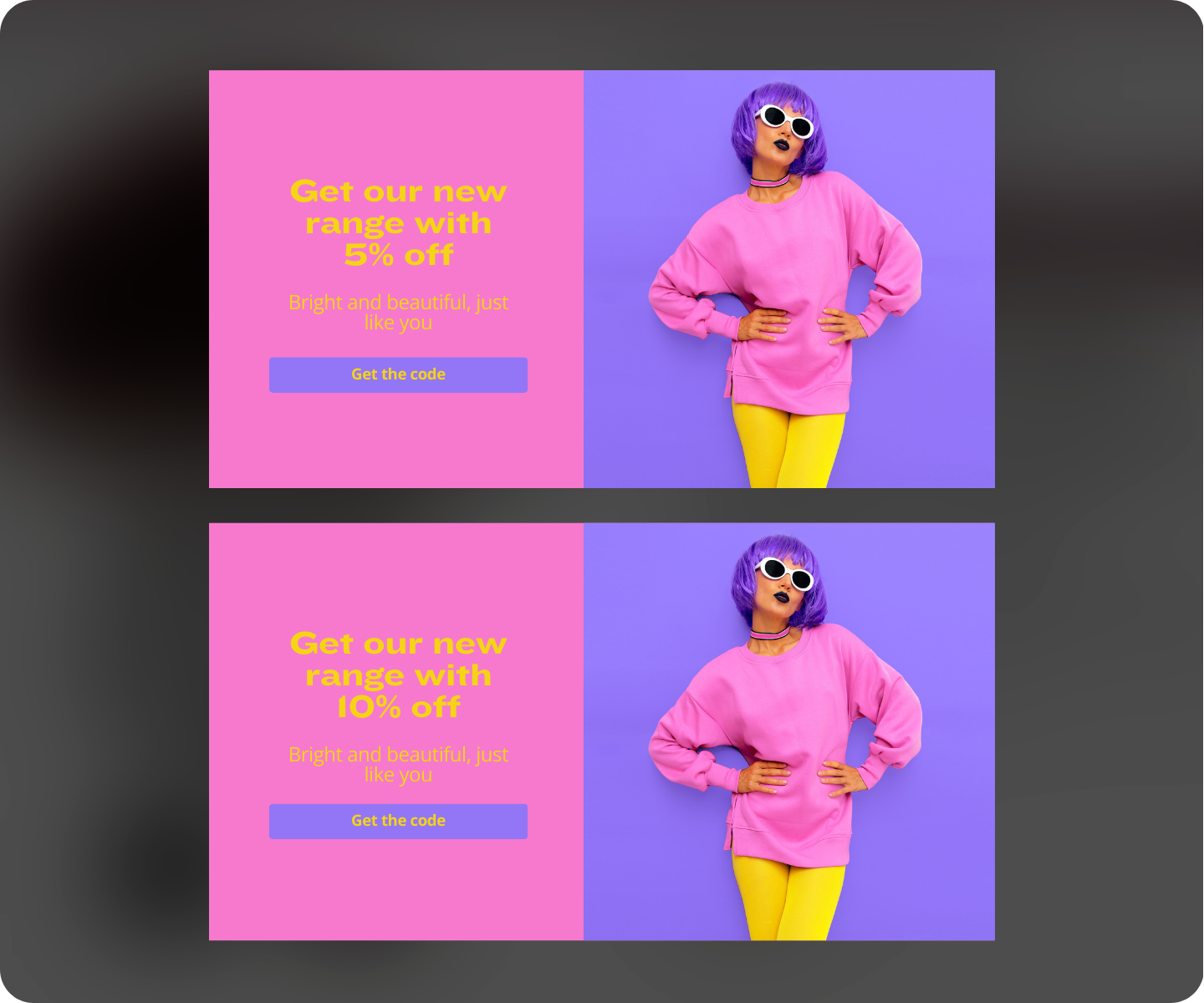

Most promotional A/B testing follows the same formula.

Test 10% off against 15% off. See which converts better. Roll out the winner.

It's better than guessing, but it still misses substantial opportunities.

Discount percentage is one variable. Timing, triggers, offer format, and audience also shape whether a promotion drives profitable conversions or just teaches customers to wait for deals.

Here's a practical framework for testing the variables beyond discount level. It covers what to test, what actually drives eCommerce conversion rate optimization (CRO), and how to set your testing priorities.

The problem with discount-only testing

Testing only discount levels asks: how much must we give away to close this sale?

A more useful question: who needs an incentive, when, and which offer changes behavior?

Research from Baymard Institute shows that the average cart abandonment rate is 70.19%. The most common reason? Extra costs like shipping appearing at checkout, cited by 48% of shoppers who abandoned.

That tells us something important. Sometimes the winning "promotion" isn't a bigger discount. It's removing friction at the right moment.

The four dimensions of promotional testing

Effective eCommerce promotions rely on precision, not just size.

Here are the four variables worth testing beyond the discount percentage itself, and the key learnings from each.

1. Timing: when does the offer appear?

When an offer appears changes how customers perceive it.

Show a discount immediately, and the relationship anchors to price. Delay it, and customers engage with your products first, potentially converting without incentives.

Tests to run:

- Immediate offer on landing vs a delayed offer after 30 seconds of browsing

- First page vs product page vs checkout

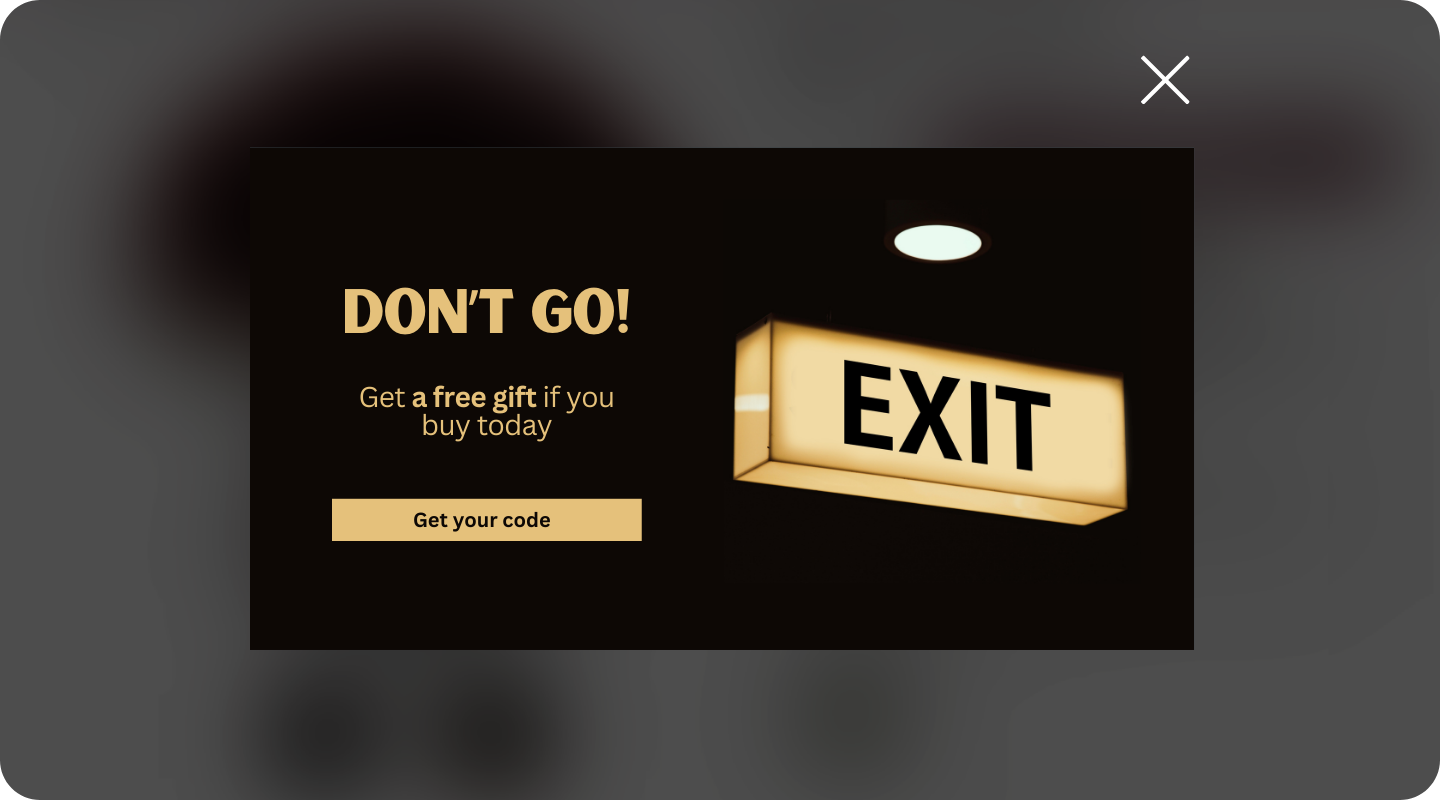

- Early engagement vs exit-intent timing

Exit-intent offers are particularly interesting. They target customers who've demonstrated interest but are about to leave, meaning you're not discounting to people who would have bought anyway.
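A timing test only works if each visitor sees the same arm on every page view, so the assignment should be deterministic. The Python sketch below shows one way to do this. It assumes a stable visitor ID is available, such as a first-party cookie value. The experiment name, arm names, and delays are hypothetical and mirror the immediate vs 30-second test above.

```python
import hashlib
from typing import Dict

# Hypothetical arms for the timing test: seconds of browsing before the offer appears.
TIMING_ARMS: Dict[str, int] = {
    "immediate": 0,
    "delayed_30s": 30,
}

def assign_arm(visitor_id: str, experiment: str, arms: Dict[str, int]) -> str:
    """Deterministically map a visitor to one arm.

    Hashing the experiment name together with the visitor ID returns the same
    arm on every page view and gives each experiment its own split.
    """
    names = sorted(arms)  # fixed order, so the mapping never depends on dict ordering
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode("utf-8")).hexdigest()
    return names[int(digest, 16) % len(names)]

def offer_delay_seconds(visitor_id: str) -> int:
    """How long this visitor browses before the offer is shown."""
    return TIMING_ARMS[assign_arm(visitor_id, "offer-timing-test", TIMING_ARMS)]
```

`offer_delay_seconds("visitor-123")` returns either 0 or 30 and returns the same value on every call. An exit-intent arm needs a browser-side signal, which this sketch does not model.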

2. Triggers: what behavior unlocks the offer?

Not all cart activity signals the same intent.

Someone with £150 in their basket is in a different mindset than someone browsing with £30. A returning visitor behaves differently from a first-timer.

Tests to run:

- Cart value thresholds: offer at £50 vs £75 vs £100

- Session count triggers: first visit vs third visit

- Product category triggers: different offers for different departments

- Browse abandonment vs cart abandonment (different intent levels)

Threshold-based triggers incentivize larger baskets and protect margin on small orders.
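Each trigger test above is, in effect, a different eligibility rule evaluated against the visitor's session. The Python sketch below expresses a few such rules. The `Session` fields, the offer names, and the £75 default threshold are illustrative assumptions. The `threshold` parameter is the setting a £50 vs £75 vs £100 test would vary.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Session:
    """Hypothetical snapshot of one visitor's session."""
    cart_value: float         # basket total in GBP
    session_count: int        # 1 = first visit
    leaving_with_cart: bool   # True when a cart-abandonment signal fires

def choose_offer(session: Session, threshold: float = 75.0) -> Optional[str]:
    """Return the offer to show, or None for no incentive.

    Rules are checked in priority order. The thresholds and offer names are
    placeholders to be tested, not recommendations.
    """
    # Cart abandonment on a sizeable basket: high intent, so remove the shipping friction.
    if session.leaving_with_cart and session.cart_value >= threshold:
        return "free_shipping"
    # Cart-value threshold: reward larger baskets and keep margin on small ones.
    if session.cart_value >= threshold:
        return "ten_pounds_off"
    # Session-count trigger: a third visit without buying suggests hesitation.
    if session.session_count >= 3:
        return "free_shipping"
    # Everyone else sees no incentive.
    return None
```

To run the threshold test, call `choose_offer(session, threshold=50.0)` for one arm and `threshold=100.0` for another, leaving everything else unchanged.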

3. Offer format: same value, different presentation

A study by Deloitte found that 58% of consumers add extra items to their cart to qualify for free shipping.

Meanwhile, other research suggests that free shipping can reduce cart abandonment by as much as 48%.

Offer format matters as much as monetary value.

Tests to run:

- Percentage off vs fixed amount (15% off vs £10 off)

- Free shipping vs discount equivalent

- Free gift vs monetary discount

- Tiered offers vs flat discounts

- Bundle incentives vs single-item discounts

Fixed amounts often feel more tangible on lower-value purchases. Percentages work better on higher-ticket items. Free shipping removes a specific friction point that percentage discounts don't address.

A footwear retailer selling £80 trainers could test "£10 off" vs "free delivery" (worth £4.99). The delivery offer might convert better, even though it gives away about half as much value, because it removes the abandonment trigger rather than just reducing the total.

This is where personalization becomes powerful. Different offer formats resonate with different customer segments. Testing helps you discover which works best for your audience and category.
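The footwear example is ultimately arithmetic. Each format costs a different amount per order, so a cheaper offer can win on profit even if it converts slightly worse. The Python sketch below works through the numbers. It assumes a 50% gross margin on the £80 trainers and assumes the retailer absorbs the full £4.99 delivery charge. Both figures are assumptions, so substitute your own.

```python
def percent_vs_fixed_crossover(percent: float, fixed: float) -> float:
    """Basket value (GBP) at which `percent` off costs the same as `fixed` off."""
    return fixed / percent

def profit_per_visitor(conversion_rate: float, basket_value: float,
                       gross_margin_rate: float, offer_cost: float) -> float:
    """Expected profit per visitor for one test arm, after the offer's cost."""
    return conversion_rate * (basket_value * gross_margin_rate - offer_cost)

def breakeven_conversion_rate(base_rate: float, basket_value: float, gross_margin_rate: float,
                              base_cost: float, new_cost: float) -> float:
    """Conversion rate the costlier offer needs to match the cheaper offer's profit per visitor."""
    new_profit_per_order = basket_value * gross_margin_rate - new_cost
    if new_profit_per_order <= 0:
        raise ValueError("offer costs more than the order's gross margin")
    base = profit_per_visitor(base_rate, basket_value, gross_margin_rate, base_cost)
    return base / new_profit_per_order

# 15% off and £10 off cost the same on a basket of about £66.67.
# Above that value, the percentage discount is the more expensive offer.
crossover = percent_vs_fixed_crossover(0.15, 10.0)

# Assume free delivery (£4.99) converts at 3%. The "£10 off" offer would then
# need roughly 3.5% conversion to earn the same profit per visitor.
needed = breakeven_conversion_rate(0.03, 80.0, 0.50, base_cost=4.99, new_cost=10.0)
```

Under these assumptions, the £10 discount has to lift conversion by about 17% relative to free delivery just to break even.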

4. Audience: who receives the offer?

This is the most overlooked variable.

A blanket discount gives loyal customers and first-time browsers the same incentive, whether or not either of them needs it.

Tests to run:

- New vs returning visitor offers

- High-value vs low-value customers (based on purchase history)

- Discount-code users vs full-price buyers

- Mobile vs desktop visitors (different conversion patterns)

A fashion retailer could test offering 10% off to new visitors while showing returning customers a "free express delivery" upgrade instead. The returning customer already trusts the brand and may not need a price incentive to convert.

This is where eCommerce platforms with robust segmentation capabilities shine. The key takeaway: the goal isn't to stop offering discounts, but to stop offering them to people who don't need them.
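An audience test needs a rule that places each visitor in a segment and maps that segment to an offer. The mapping should include an explicit "no offer" for people who don't need one. The Python sketch below assumes purchase history is available as simple order counts. The `Customer` fields, segment names, and offer mapping are hypothetical and loosely follow the fashion retailer example above.

```python
from dataclasses import dataclass
from typing import Dict, Optional

@dataclass
class Customer:
    """Hypothetical customer profile built from purchase history."""
    orders: int             # completed orders on record
    full_price_orders: int  # orders placed without any discount code

def segment(c: Customer) -> str:
    """Place a customer in one of three illustrative segments."""
    if c.orders == 0:
        return "new"
    if c.full_price_orders == c.orders:
        return "full_price_buyer"
    return "returning_discount_user"

# Segment -> offer. None means "show no incentive". These pairings are
# assumptions to test, not recommendations.
SEGMENT_OFFERS: Dict[str, Optional[str]] = {
    "new": "ten_percent_off",
    "returning_discount_user": "free_express_delivery",
    "full_price_buyer": None,  # already buys at full price, so a discount only costs margin
}

def offer_for(c: Customer) -> Optional[str]:
    """Look up the offer for the customer's segment."""
    return SEGMENT_OFFERS[segment(c)]
```

An audience test then changes the offer for one segment only, for example `"returning_discount_user"`, and leaves the other entries fixed.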

Building your testing roadmap

With four dimensions to explore, where do you start?

Prioritize by impact and ease:

- Start with timing and trigger tests if you're currently showing offers to everyone

- Move to audience segmentation once you have baseline data on different customer types

- Test offer formats when you want to optimize for specific goals (margin vs conversion vs AOV)

One variable at a time:

If you test a new trigger AND a new audience segment simultaneously, you won't know which change drove the result. Isolate variables to build real understanding.

Sample size matters:

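Decide the sample size before the test starts and run until you reach it. Don't stop as soon as the result looks significant: checking repeatedly and stopping early inflates the false-positive rate. The size you need depends on your baseline conversion rate and the smallest lift worth detecting. As a rough guide, most retail sites need at least 1,000 conversions per variation and 2-4 weeks of data to account for weekly patterns.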
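To see where sample-size figures like these come from, the sketch below estimates the visitors needed per arm before the test starts. It then evaluates the finished test with a standard two-proportion z-test. It uses only Python's standard library (`statistics.NormalDist`, Python 3.8+). The 3% baseline, 10% relative lift, 5% significance level, and 80% power are example assumptions. The sample-size formula is the common normal approximation, not the only valid method.

```python
import math
from statistics import NormalDist
from typing import Tuple

def visitors_per_arm(baseline: float, relative_lift: float,
                     alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed in EACH arm for a two-sided test.

    Normal approximation:
    n = (z_{1-alpha/2} + z_{power})^2 * (p1(1-p1) + p2(1-p2)) / (p2 - p1)^2
    """
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)

def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Plan the test up front, then run until each arm reaches this size.
n = visitors_per_arm(baseline=0.03, relative_lift=0.10)
```

Under these assumptions, `n` comes out at roughly 53,000 visitors per arm, or about 1,600 conversions per arm at a 3% baseline. Smaller lifts or lower baselines push it up quickly. Call `two_proportion_z_test` only once the planned sample is reached.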

Measuring beyond conversion rate

Conversion rate is the obvious metric. But it's not the only one that matters.

A promotion that lifts conversions by 20% but attracts only deal-hunters who never return isn't a win.

Metrics to track:

- Conversion rate (the baseline)

- Average order value (are customers spending more or less?)

- Margin per order (what's the actual profit impact?)

- Promotional cost per acquisition (how much did this sale cost you?)

- Repeat purchase rate (are you attracting loyal customers or one-time bargain shoppers?)
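Given raw totals for each arm, these metrics are simple ratios. The Python sketch below computes them. The `ArmTotals` fields and example figures are hypothetical. Repeat purchases can only be counted once a follow-up window has passed after the test, for example 90 days.

```python
from dataclasses import dataclass
from typing import Dict

@dataclass
class ArmTotals:
    """Hypothetical totals for one test arm (money in GBP)."""
    visitors: int
    orders: int
    customers: int         # distinct customers who ordered
    revenue: float         # what customers actually paid, after discounts
    costs: float           # cost of goods and fulfilment, incl. any shipping or gifts you absorbed
    promo_cost: float      # discounts + absorbed shipping + gifts (already reflected above)
    repeat_customers: int  # of those customers, how many bought again in the follow-up window

def summarize(arm: ArmTotals) -> Dict[str, float]:
    """Compute the per-arm metrics listed above."""
    return {
        "conversion_rate": arm.orders / arm.visitors,
        "average_order_value": arm.revenue / arm.orders,
        # promo_cost is not subtracted again: discounts already lowered revenue,
        # and absorbed shipping or gifts are already counted in costs.
        "margin_per_order": (arm.revenue - arm.costs) / arm.orders,
        # Measured per order here; divide by new customers instead if
        # "acquisition" means first purchases only.
        "promo_cost_per_acquisition": arm.promo_cost / arm.orders,
        "repeat_purchase_rate": arm.repeat_customers / arm.customers,
    }

variant = ArmTotals(visitors=10_000, orders=300, customers=280, revenue=24_000.0,
                    costs=13_500.0, promo_cost=2_400.0, repeat_customers=56)
```

Computing the same summary for control and variant puts margin and repeat behavior next to the conversion lift. A variant that wins on conversion but loses on margin per order is then visible at a glance.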

The goal of promotional optimization is not just more conversions, but more profitable conversions. Always measure impact on both revenue and margin.

Start testing what matters

The 10% vs 15% test isn't wrong. It's just incomplete.

Top retailers test not just discount levels, but also timing, triggers, formats, and recipients.

Test every major promotion variable, not just the discount, for maximum impact.

(Your finance team will thank you.)